Qualcomm AI Rack-Scale Solutions Use LPDDR Mobile Memory to Compete with NVIDIA and AMD

Competition in the artificial intelligence (AI) sector is intensifying. Qualcomm, traditionally known for its mobile chips, is moving into data center AI with two new accelerators. By building its AI chip solutions around mobile memory technology, Qualcomm hopes to carve out a niche that challenges industry giants like NVIDIA and AMD.

Qualcomm makes a bold move in AI chip technology

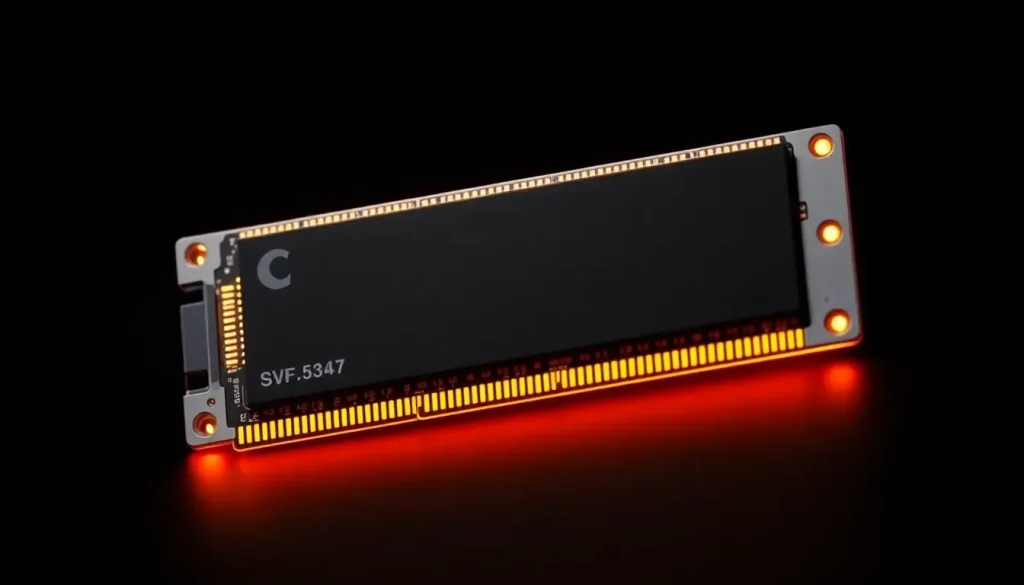

Qualcomm has taken a considerable step away from its mobile-centric history to introduce its latest AI chips, the AI200 and AI250. These chips are built for rack-scale AI inference solutions, a segment that has predominantly been the playground of established players such as NVIDIA and AMD. The choice to use onboard LPDDR mobile memory sets Qualcomm apart from those competitors.

The AI200 and AI250 mark a pivotal moment for Qualcomm as it enters an increasingly crowded field. Choosing LPDDR memory instead of the more traditional high-bandwidth memory (HBM) could reshape how companies think about AI inference. Qualcomm's strategy is built around reducing energy consumption and costs, factors that are becoming increasingly important in data center operations.

Exploring the advantages of LPDDR memory over HBM

LPDDR memory offers several benefits compared to HBM, which has long been the standard in high-performance computing environments. Here are some key advantages:

- Power efficiency: LPDDR memory consumes less power per bit, which can lead to significant energy savings in large-scale deployments.

- Cost-effectiveness: LPDDR memory is generally cheaper than modern HBM modules, making it a more accessible option for companies looking to implement AI solutions at scale.

- High memory density: LPDDR can provide large memory capacity per accelerator, which suits inference operations that often require substantial memory resources.

- Thermal efficiency: LPDDR generates less heat, enabling systems to operate more efficiently without extensive cooling solutions.

Despite these benefits, the decision to use LPDDR memory comes with trade-offs. Compared to HBM, LPDDR solutions typically deliver lower memory bandwidth, because of their narrower data interfaces, and higher latency. LPDDR may also be less reliable under the sustained high temperatures common in data centers that run around the clock.
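To see why lower memory bandwidth matters for inference, consider a rough back-of-the-envelope estimate. The sketch below assumes a simple bandwidth-bound model in which generating each token requires streaming all model weights from memory once, so the token rate cannot exceed bandwidth divided by model size. The bandwidth and model-size figures are hypothetical placeholders chosen for illustration; they are not specifications of the AI200, the AI250, or any NVIDIA or AMD product.

```python
def decode_tokens_per_second(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Upper bound on single-stream decode rate, assuming every generated
    token streams the full set of model weights from memory once."""
    if bandwidth_gb_s <= 0 or model_size_gb <= 0:
        raise ValueError("bandwidth and model size must be positive")
    return bandwidth_gb_s / model_size_gb


# Hypothetical figures for illustration only; not vendor specifications.
MODEL_SIZE_GB = 70.0          # e.g. 70 billion weights at 1 byte each
LOW_BANDWIDTH_GB_S = 500.0    # placeholder for a lower-bandwidth memory system
HIGH_BANDWIDTH_GB_S = 4000.0  # placeholder for a higher-bandwidth memory system

low = decode_tokens_per_second(LOW_BANDWIDTH_GB_S, MODEL_SIZE_GB)
high = decode_tokens_per_second(HIGH_BANDWIDTH_GB_S, MODEL_SIZE_GB)

print(f"lower-bandwidth bound:  {low:.1f} tokens/s")
print(f"higher-bandwidth bound: {high:.1f} tokens/s")
print(f"ratio: {high / low:.1f}x")
```

Under this simplified model, the bound scales linearly with bandwidth. Real systems batch many requests and overlap compute with memory traffic, so actual throughput depends on far more than this single ratio.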

Technical specifications and features of the AI200 and AI250

The AI200 and AI250, and the racks built around them, include several features aimed at AI inference tasks. Key specifications include:

- Direct liquid cooling: This feature enhances thermal management, ensuring the chips maintain optimal operating temperatures.

- Support for PCIe/Ethernet protocols: This compatibility allows for seamless integration into existing data center infrastructures.

- Rack-level power consumption: A full rack, not an individual chip, is designed to draw 160 kW, a figure in line with modern rack-scale AI solutions.

- Hexagon NPUs: These specialized processing units expand the chips' capabilities in handling complex inference operations and support advanced data formats.
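To put the 160 kW rack figure in perspective, the sketch below converts it into annual energy use. Only the 160 kW value comes from the specifications above; the electricity price and the assumption of continuous full-power operation are hypothetical and chosen purely for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_kwh(rack_power_kw: float, utilization: float = 1.0) -> float:
    """Energy drawn in one year by a rack running at a given average utilization."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return rack_power_kw * utilization * HOURS_PER_YEAR


RACK_POWER_KW = 160.0   # rack-level figure quoted in the article
PRICE_PER_KWH = 0.10    # hypothetical electricity price in USD, an assumption

energy = annual_energy_kwh(RACK_POWER_KW)
cost = energy * PRICE_PER_KWH

print(f"{energy:,.0f} kWh per year")        # 1,401,600 kWh per year
print(f"${cost:,.0f} per year at ${PRICE_PER_KWH:.2f}/kWh")
```

This estimate covers the rack's own draw only. Facility overhead such as cooling and power conversion would add to it, which is why per-rack power is a closely watched figure in data center planning.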

While Qualcomm's advancements are impressive, the company still faces competition from established players. NVIDIA and AMD continue to lead with products designed for both high-performance training and inference workloads. Qualcomm's focus on LPDDR memory may limit the use of its solutions in more demanding, bandwidth-hungry applications.

The competitive landscape of AI inference solutions

The AI inference market is witnessing a surge in competition, as more companies recognize the immense potential for growth in this sector. Qualcomm's foray into this space is not an isolated incident; other tech giants are also launching their own solutions. Recent examples include:

- Intel's Crescent Island GPU, which is designed to handle large-scale inference workloads.

- NVIDIA's new Rubin CPX AI chip, aimed at enhancing inference capabilities in data centers.

These developments indicate that the inferencing segment of AI is gaining traction, drawing interest from various compute providers. Qualcomm's AI200 and AI250 chips represent a strategic response to this growing demand, offering a solution that emphasizes energy efficiency and cost-effectiveness.

As the competition heats up, it will be interesting to see how Qualcomm's innovations are received by the market. The initial responses from retailers have been optimistic, suggesting a promising future for Qualcomm in the AI landscape.

The ongoing evolution of AI technologies and solutions will undoubtedly continue to transform industries and create new opportunities. As Qualcomm navigates this exciting terrain, its ability to innovate and adapt will be crucial in establishing its presence alongside established titans like NVIDIA and AMD.
